Ensuring the cybersecurity of information systems and associated networks has always been challenging. Serious vulnerabilities are identified on a regular basis and new threats continue to emerge to exploit those vulnerabilities. Industrial systems share many of the same vulnerabilities and are subject to the same threats. However, the consequences may be very different and, in some cases, more severe. This makes cybersecurity an imperative for the asset owner, who ultimately must bear the consequences of an adverse event.

The threat is ongoing and evolves constantly, so cybersecurity should not be viewed as a one-time “project” with a defined beginning and end. Since there is no such thing as being fully secure, the preferred approach should be ongoing, similar to the approach used for safety, quality, and other performance-based programs.

Similarly, it is not sufficient to focus on specific elements. Instead, asset identification and management, patch management, threat assessment, and so on are all parts of a broader response that must address all phases of the life cycle.

This response begins with identifying principal roles and assigning responsibilities and accountability for each stage of the system life cycle. With these addressed, the well-established systems engineering discipline can provide effective tools and methods to help define, plan, and conduct the response.

Applying such a formal discipline may be daunting for those not skilled and experienced in this area, but guidance and reference material are available from organizations such as NIST and INCOSE. Service providers and consultants can also provide more specialized expertise if required.

The imperative to make substantial improvements in the cybersecurity of industrial control systems and associated networks is well established. The risk to these systems changes regularly as new threats and vulnerabilities are identified. While the potential consequences may be different for industrial systems, they share many of the same vulnerabilities and are subject to the same threats as information systems that use the same or similar technology.

Not every cyber incident is a deliberate, targeted attack, but the potential consequences are often serious. Monitoring, control, and safety systems play a critical role in process manufacturing, discrete manufacturing, and virtually all industries that are part of the critical infrastructure. This makes cybersecurity an imperative for the asset owner, who ultimately must bear the consequences of an adverse event.

The typical response to cybersecurity risk has been inherently reactive, involving one or more specific, focused initiatives. For example, asset owners isolate, patch, or otherwise protect installed systems, while system, product, and service suppliers provide and validate patches for identified vulnerabilities. These measures are certainly required when first addressing the problem but, by themselves, are insufficient over the long term. Individual initiatives (e.g., asset identification, patching, and isolation) are often not adequately coordinated and, in some cases, may include implicit assumptions that must be challenged.

Some people assume that the response to cybersecurity threats should be structured as a project, implying that it has a well-defined end or outcome. This is incorrect, as it assumes that there is a problem that can be “solved” in a foreseeable timeframe. A more realistic approach is to view cybersecurity as similar to safety, quality improvement, and other performance-based programs. Security is not an absolute objective, but rather an attribute of systems and technology that must be monitored and managed with continuous improvement in mind.

Taking a project approach can also oversimplify the nature of the challenge. For example, the absence of accurate inventory records can lead to a singular focus on asset discovery and identification. While it is true that you cannot secure devices that you are not aware of, this is but one early step in a more comprehensive response. While a single database or repository that contains the inventory of all systems across an enterprise could help, this is often not feasible and may not be necessary if there is adequate access to and sharing of information in separate databases.
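As a rough illustration of sharing information across separate databases rather than building a single repository, the sketch below merges records from two inventory sources into one view. It is a minimal sketch under stated assumptions: the `AssetRecord` fields, the source names, and the use of a shared `asset_id` key are all hypothetical and do not come from any standard or product.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class AssetRecord:
    asset_id: str                    # hypothetical shared key, e.g. a serial number
    source: str                      # name of the database the record came from
    firmware: Optional[str] = None
    location: Optional[str] = None


def merge_inventories(*sources: Iterable[AssetRecord]) -> Dict[str, dict]:
    """Combine records from separate databases into one view keyed by asset_id."""
    merged: Dict[str, dict] = {}
    for records in sources:
        for rec in records:
            entry = merged.setdefault(
                rec.asset_id, {"sources": [], "firmware": None, "location": None}
            )
            entry["sources"].append(rec.source)
            # Keep the first non-empty value seen for each attribute.
            entry["firmware"] = entry["firmware"] or rec.firmware
            entry["location"] = entry["location"] or rec.location
    return merged


def single_source_assets(merged: Dict[str, dict]) -> List[str]:
    """Assets known to only one database, which may point to a coverage gap."""
    return sorted(a for a, e in merged.items() if len(e["sources"]) == 1)


# Illustrative data only.
ot_records = [
    AssetRecord("PLC-001", "ot_cmdb", firmware="2.1"),
    AssetRecord("HMI-007", "ot_cmdb", firmware="5.0"),
]
it_records = [AssetRecord("PLC-001", "it_scan", location="Line 3")]

view = merge_inventories(ot_records, it_records)
gaps = single_source_assets(view)
```

In practice the hard part is agreeing on a common key and on which source is authoritative for each attribute. The "first non-empty value wins" rule here is only a placeholder for that decision.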

Another example of oversimplification is a singular focus on patch management. While it is certainly a worthwhile goal to keep systems at current patch levels, there are situations where this is impractical, or even impossible. The use of compensating controls such as isolation devices is an acceptable strategy for such situations. The goal must be to implement a patching protocol that keeps as many systems as possible at current software revision levels, while accommodating situations where this is not possible.
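The patching protocol described above can be pictured as a simple triage rule: patch where possible, and fall back to a compensating control where not. The following is a minimal sketch, not a recommended policy. The `SystemStatus` fields and the three `Action` values are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "already current"
    PATCH = "apply patch"
    COMPENSATE = "apply compensating control (e.g., isolation)"


@dataclass
class SystemStatus:
    name: str
    patch_current: bool      # system is already at the current patch level
    patch_validated: bool    # a supplier-validated patch exists (assumed flag)
    outage_possible: bool    # operations can tolerate the patch window (assumed flag)


def triage(system: SystemStatus) -> Action:
    """Choose between patching and a compensating control for one system."""
    if system.patch_current:
        return Action.NONE
    if system.patch_validated and system.outage_possible:
        return Action.PATCH
    # Patching is impractical or impossible, so protect the system another way.
    return Action.COMPENSATE


# Illustrative data only.
fleet = [
    SystemStatus("historian", patch_current=True, patch_validated=True, outage_possible=True),
    SystemStatus("hmi-01", patch_current=False, patch_validated=True, outage_possible=True),
    SystemStatus("legacy-dcs", patch_current=False, patch_validated=False, outage_possible=False),
]
plan = {s.name: triage(s) for s in fleet}
```

A real protocol would weigh more factors, such as vulnerability severity and exposure, but the structure stays the same: every system ends up with an explicit decision.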

Confusion and, in some cases, conflict over which disciplines or organizations are best positioned to address cybersecurity is another recurring theme that limits real progress. The “IT versus OT” discussion has been going on for years, often driven by bias and lack of trust between the respective organizations. The reality is that no single organizational response or model is best for all situations; a successful response to cybersecurity risk typically requires experience and expertise from several disciplines. These include, but are not limited to, engineering, information security, and network design. The focus must be on assembling a program that includes all of these disciplines, working against a coordinated plan.

In some situations, the necessary skills and expertise may not be readily available. Asset owners may not have internal experts in areas such as network design and monitoring or system configuration and may rely heavily on a third party.

The traditional response also includes developing or defining formal processes for each aspect of the cybersecurity response. These must include clear assignment of responsibility and accountability in areas such as change management, system monitoring, and performance reporting. Defining and documenting formal processes is not enough. Audits commonly find situations where the processes have been defined, but people don’t follow them. It is also essential to have clear measures or metrics to determine the effectiveness of these processes and make any improvements required.

While all of the above are valid components of the cybersecurity response, none is sufficient by itself. A comprehensive program must include many, if not all, of these essential elements, along with other supporting elements.

Although there are many possible approaches to addressing cyber risk, several common elements provide the foundation of any comprehensive program.

It is a fundamental principle that security is required across the entire life cycle of products, systems, and solutions. The life cycle concept is well established and has been referenced in international standards such as IEC 24748 (Systems and Software Engineering – Life Cycle Management, Guidelines for Life Cycle Management). This standard defines a system as a combination of interacting components organized to achieve one or more stated purposes. These components can be any combination of hardware, software, and supporting data. In all but the simplest situations, this concept is extended to define a system of interest composed of multiple related systems. This is generally described as a “system of systems.”

Systems and their components all have a specific life cycle, evolving from initial conceptualization through eventual retirement. Fully addressing security requires addressing each life cycle phase by defining the accountability and responsibilities of each of several principal roles.

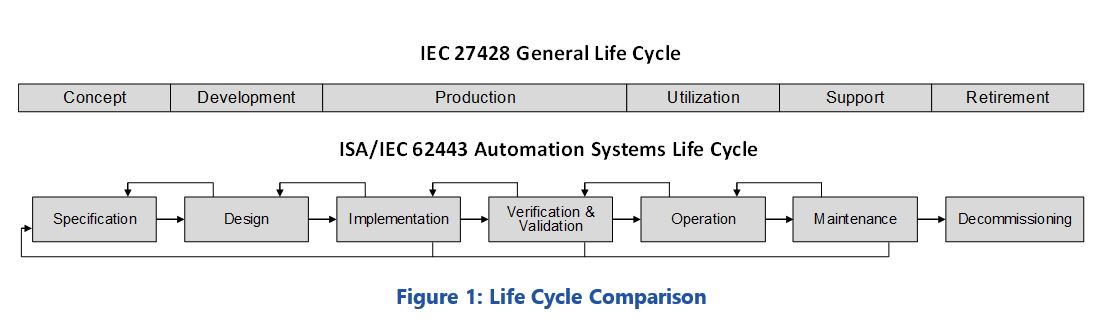

The IEC 24748 standard defines six life cycle stages: concept, development, production, utilization, support, and retirement.

The ISA/IEC 62443 standards have modified the general model slightly to fit better within the context of industrial system security. Figure 1 (above) compares the two models. In addition to changes to the names of some phases (e.g., “support” to “maintenance”) the production and utilization phases have been changed to the three separate phases of implementation, validation, and operation. This allows for additional emphasis on systems integration. Specific security-related responsibilities and tasks are associated with each phase of the revised model.

It is not critical for the asset owner to fully understand all the details and nuances associated with all the stages of this life cycle, but awareness of the life cycle is essential, along with the specific requirements that must be met at each stage.

A well-defined life cycle is important, but not sufficient in and of itself. A clear set of role definitions is also essential. The ISA/IEC 62443 standards define several principal roles in terms of activities, responsibilities, and accountability at various stages of the life cycle:

Those filling the above roles must collaborate and cooperate at each stage of the life cycle to achieve the desired results.

Adopting a life cycle approach and identifying and assigning principal roles are critical first steps in developing an effective cybersecurity program. Together, they help avoid the tendency to adopt “pinhole” views of the problem being addressed. However, several realities add to the complexity of the task. It is important to acknowledge, understand, and address each of these in a deliberate and measured manner.

It is important to remember that the technology and products employed in industrial systems are inherently flawed in that they are certain to include both identified and unidentified vulnerabilities. Some of these result from imperfect development practices; others may be the consequence of specific design decisions. Adding new technology to the system may or may not improve the level of security but will almost certainly increase complexity.

