As cloud platform providers and chip makers confront today’s already-massive and rapidly growing volumes of data, stakeholders are wrestling with how best to partition data processing between the cloud and the network edge. The current focus on the IoT in both the business and industrial sectors drives these discussions, which will be shaped to a significant degree by the current state of embedded AI at the device level.

It’s widely agreed that digital transformation and the many ecosystems of the IoT will generate huge volumes of data that must be processed and stored. At current growth rates, petabytes (one million gigabytes) are little more than rounding errors, and exabytes (one billion gigabytes) will soon replace them as the working unit of measure.

Clearly, these numbers are well beyond human scale, and it is becoming equally clear that, to handle this much data, much of the processing will have to take place at the edge. Moving all of this data to cloud-based processing centers would consume far too many computing resources, too much time, and too much energy.
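A back-of-envelope calculation helps show why moving everything to the cloud does not scale. The sketch below assumes a single network link running at an idealized, fully utilized rate; the link speeds and the `efficiency` factor are illustrative assumptions, not figures from this report, and the function name is hypothetical.

```python
# Back-of-envelope estimate: how long it would take to move a data volume
# to a cloud data center over a single network link, ignoring everything else.

BYTES_PER_GB = 10**9        # decimal (SI) gigabyte
PETABYTE_GB = 10**6         # 1 PB = one million GB, as stated in the text
EXABYTE_GB = 10**9          # 1 EB = one billion GB, as stated in the text
SECONDS_PER_DAY = 86_400


def transfer_days(volume_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to push volume_gb gigabytes through a link_gbps gigabit/s link.

    efficiency < 1.0 is an assumed allowance for protocol overhead and contention.
    """
    bits = volume_gb * BYTES_PER_GB * 8
    bits_per_second = link_gbps * 10**9 * efficiency
    return bits / bits_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    for label, volume in (("1 PB", PETABYTE_GB), ("1 EB", EXABYTE_GB)):
        for gbps in (10, 100):  # hypothetical link speeds
            print(f"{label} over {gbps} Gbit/s: {transfer_days(volume, gbps):,.1f} days")
```

Even under these ideal assumptions, one petabyte takes about 9.3 days over a 10 Gbit/s link, and one exabyte takes about 9,259 days (over 25 years) on the same link, or roughly 926 days at 100 Gbit/s.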

In addition to where these mountains of data will be processed, there is an ongoing debate over who (or what) will do the processing. Cloud platform providers maintain that massively scaled data centers are the most efficient way to churn through the huge volumes of operational data that IoT devices produce every day. Device makers, conversely, believe they can process much of the data at the source, provided they can embed intelligent, purpose-built artificial intelligence (AI) and machine learning (ML) into the devices.

The edge will become more intelligent by necessity. Moving massive amounts of device and sensor data to the cloud will be too slow for the real-time processing of operational data that industrial environments, such as automotive and other factory production settings, require.

There is continuing discussion about what exactly constitutes an intelligent edge, and the definition continues to evolve. Some in the edge device industry maintain that the edge lies between the IoT device and the cloud, and define it as a range of smaller, vertically dedicated clouds. These “mini” clouds are more energy efficient and reduce the latency inherent in moving massive amounts of data from devices and sensors to large-scale enterprise data center clouds. There appears, however, to be a consensus forming that embedded intelligence in edge devices, powered by next-generation processors designed specifically for AI and inference-based machine learning, will drive the future of the IoT and its implementation.
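The trade-off between the device, a “mini” edge cloud, and a large enterprise cloud can be sketched as a simple placement rule. This is a minimal illustration, assuming a three-tier model in which each workload has a latency deadline and a compute requirement; the tier names, latencies, and throughputs are hypothetical placeholders, and "run on the tier nearest the data that still meets the deadline" is just one possible policy, not one the report prescribes.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # network round trip from the data source (assumed)
    compute_gops: float   # sustained throughput available, giga-ops per second (assumed)


@dataclass(frozen=True)
class Workload:
    name: str
    deadline_ms: float    # end-to-end latency budget per decision
    work_gop: float       # compute required per decision, in giga-operations


# Ordered from nearest to farthest from the devices; all figures are placeholders.
TIERS = (
    Tier("device", round_trip_ms=0.0, compute_gops=5.0),
    Tier("edge mini-cloud", round_trip_ms=5.0, compute_gops=500.0),
    Tier("enterprise cloud", round_trip_ms=80.0, compute_gops=50_000.0),
)


def latency_ms(tier: Tier, job: Workload) -> float:
    """Estimated latency: network round trip plus compute time at this tier."""
    return tier.round_trip_ms + job.work_gop / tier.compute_gops * 1000.0


def place(job: Workload, tiers: Sequence[Tier] = TIERS) -> Optional[Tier]:
    """Return the tier nearest the data that still meets the deadline, else None."""
    for tier in tiers:
        if latency_ms(tier, job) <= job.deadline_ms:
            return tier
    return None


if __name__ == "__main__":
    jobs = (
        Workload("motor control loop", deadline_ms=10, work_gop=0.01),
        Workload("visual inspection", deadline_ms=20, work_gop=1.0),
        Workload("model retraining", deadline_ms=60_000, work_gop=1_000_000),
    )
    for job in jobs:
        tier = place(job)
        print(f"{job.name}: {tier.name if tier else 'no tier meets the deadline'}")
```

With these placeholder numbers, the tight control loop stays on the device, the heavier inspection task moves to the edge mini-cloud, and only the large, latency-tolerant retraining job goes to the enterprise cloud.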

Artificial intelligence currently encompasses a wide variety of subfields. These range from the general (learning and perception) to the specific, such as driving an autonomous car; diagnosing diseases; planning complex logistics; predictive, prescriptive, and self-healing machines and systems; robotic assistants; and running an autonomous factory. Machine learning is one of these subfields and holds significant promise for distributed intelligence in an IoT system of systems. In short, AI is relevant to any intellectual task.

The Turing Test, proposed by Alan Turing in 1950, was designed to provide a satisfactory operational definition of machine intelligence. A computer or an intelligent agent passes the test if a human interrogator, after posing some written questions, cannot tell whether the responses come from a human or a computer. Even in the current advanced state of AI research, the Turing Test remains relevant in determining machine intelligence. Today, for a system to be considered “intelligent,” it would need the following capabilities:

These six primary disciplines make up most of AI today and are used to design and build the intelligent systems deployed across industry, transportation, business, infrastructure, smart cities, and the military.

The development and application of machine learning represent a major milestone in AI research and have revitalized the field in recent years. ML is having a significant impact across virtually every market where it’s

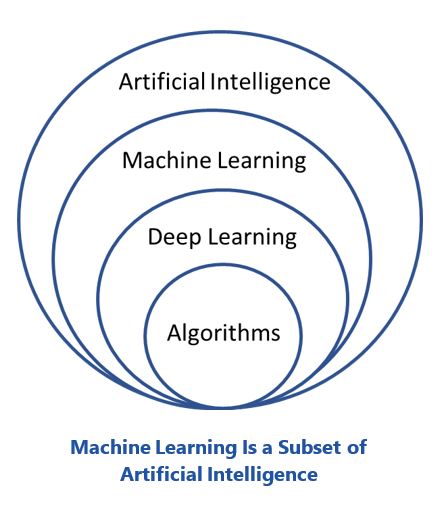

The terms AI and ML are often used interchangeably. In the context of data science, however, they are distinct. AI is an umbrella term for a combination of hardware and software that enables a machine or system to mimic human intelligence. A range of basic AI disciplines, including ML, computer vision, and natural language processing, is used to deliver this “intelligence.” ML, a subset of AI, uses statistical methods, mathematical probability, and pattern matching to enable “learning” through training algorithms, rather than relying on explicit, rules-based programming.
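The difference between rules-based programming and learning from data can be made concrete with a deliberately tiny example. The sketch below assumes a hypothetical vibration-monitoring task: the rules-based version hard-codes an alarm threshold, while the learned version fits a one-feature decision stump to labeled examples. Fitting the threshold plays the role of training, and applying it to new readings plays the role of inference. The data, the 7.0 mm/s rule, and all function names are illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical labeled history: (vibration velocity in mm/s, fault observed?)
TRAINING: List[Tuple[float, bool]] = [
    (2.1, False), (3.4, False), (4.0, False), (4.8, False),
    (5.6, True), (6.2, True), (7.5, True), (9.0, True),
]


def rule_based(vibration_mm_s: float) -> bool:
    """Rules-based: an engineer hard-codes the alarm threshold (7.0 is arbitrary)."""
    return vibration_mm_s > 7.0


def learn_threshold(samples: List[Tuple[float, bool]]) -> float:
    """Training: choose the threshold that misclassifies the fewest labeled samples
    (a one-feature decision stump). Candidates are midpoints between neighboring
    distinct values, plus one value below the minimum."""
    values = sorted({v for v, _ in samples})
    candidates = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        # Count samples where the predicted label disagrees with the observed label.
        return sum((v > threshold) != faulty for v, faulty in samples)

    return min(candidates, key=errors)


def infer(threshold: float, vibration_mm_s: float) -> bool:
    """Inference: apply the learned threshold to a new, unlabeled reading."""
    return vibration_mm_s > threshold


if __name__ == "__main__":
    t = learn_threshold(TRAINING)
    print(f"learned threshold: {t:.2f} mm/s")
    for reading in (5.0, 6.0):
        print(f"{reading} mm/s -> rule: {rule_based(reading)}, learned: {infer(t, reading)}")
```

On this toy data the learned threshold is 5.20 mm/s, so a 6.0 mm/s reading is flagged by the learned model but not by the hand-written rule. Real ML models learn many parameters rather than one, but the split between fitting on labeled data and applying the result to new data is the same.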

Typically, ML systems process training data to improve their performance on a task progressively and systematically, so that their results get better with experience. This learning-through-experience process mimics human intelligence. In the case of the IoT, data is taken from the edge (whether an IoT device, edge server, or edge device) and sent to the cloud to be used for training. Once an ML system is trained, it can analyze new data and categorize it in the context of the training data; in AI parlance, this is known as inference. Today, ML is performed in one of two locations:

While the first wave of ML focused on cloud computing, the next generation of processors from chip makers is enabling an order-of-magnitude increase in embedded intelligence in edge devices. The combination of improved techniques for shrinking models to run in embedded designs on low-power hardware and vastly increased processing power on edge devices is opening real possibilities for significant product differentiation and unit cost reduction.
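The report does not say which model-shrinking techniques it has in mind. Post-training quantization, which stores weights trained in full precision as 8-bit integers, is one widely used example, and the sketch below illustrates it under stated assumptions: a symmetric, per-tensor scale; a single dot product standing in for one layer of a model; and hypothetical weights and inputs. Production toolchains implement far more elaborate schemes than this.

```python
from array import array
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[array, float]:
    """Symmetric, per-tensor post-training quantization to signed 8-bit integers.
    Each weight w is stored as q = round(w / scale), with scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dot_float(weights: Sequence[float], inputs: Sequence[float]) -> float:
    """Reference inference step with full-precision weights."""
    return sum(w * x for w, x in zip(weights, inputs))


def dot_int8(q: array, scale: float, inputs: Sequence[float]) -> float:
    """Same step with 8-bit weights: accumulate q * x, then rescale once."""
    return scale * sum(qi * x for qi, x in zip(q, inputs))


# Hypothetical layer weights (as if trained in the cloud) and one edge input vector.
WEIGHTS: List[float] = [0.82, -1.27, 0.05, 0.33, -0.61, 1.10, -0.02, 0.47]
INPUTS: List[float] = [1.0, 0.5, -2.0, 0.25, 1.5, -0.75, 3.0, 0.1]

if __name__ == "__main__":
    q, scale = quantize_int8(WEIGHTS)
    fp32_bytes = array("f", WEIGHTS).itemsize * len(WEIGHTS)
    int8_bytes = q.itemsize * len(q)
    print(f"storage: {fp32_bytes} bytes as float32 vs {int8_bytes} bytes as int8")
    print(f"float result: {dot_float(WEIGHTS, INPUTS):.4f}")
    print(f"int8 result:  {dot_int8(q, scale, INPUTS):.4f}")
```

Storage for the weights drops fourfold (from 32 to 8 bytes here), and because each stored weight is off by at most half a quantization step, the quantized result stays within `scale / 2` times the sum of the absolute input values of the full-precision result.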

ARC Advisory Group clients can view the complete report at ARC Client Portal


Keywords: Embedded Intelligence, AI, ML, IoT, Intelligent Edge Devices, Inferencing, Deep Learning, IoT Architectures, ARC Advisory Group.