Interpretable machine learning models provide insight into relationships between inputs and outputs. This enables them to not only produce predictions but also explain them. These interpretable techniques are also commonly referred to as white box machine learning models and are suitable for industrial process improvement.

Meet Susan. Susan has been working at a steel mill for the last 30 years. She is a continuous improvement manager. Her job is to reduce quality issues, improve product tolerances, and minimize costs. A lot has changed since the 1980s: the factory is now completely controlled by digital equipment, and Susan spends much more of her time in front of a computer, trying to make sense of all the data the mill generates.

Susan is an expert in process improvement techniques. She has run multiple Six Sigma projects, each focusing only on a hand-picked subset of quality metrics and parameters. The insights she gained through these projects were valuable, but the tools simply couldn’t handle all the data from the entire process. She felt that she was missing the whole picture.

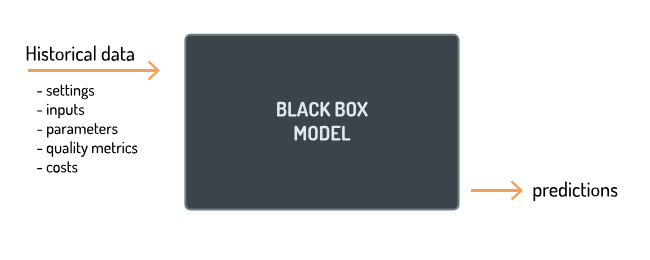

Machine learning is a computational technique for discovering patterns and relationships in complex data sets. Let’s imagine a machine learning model that could help Susan analyze her entire process. Susan has hundreds of settings, inputs, and parameters she can manipulate during the manufacturing process. One can imagine training a model on the historical data for those settings and having it predict optimal settings for Susan to recommend to her colleagues.

This is called a “black box” model. Black box machine learning models are excellent tools for prediction. They are great at learning how to make predictions based on historical inputs to a system. These black box machine learning models have seen widespread adoption in the technology sector, enabling companies like Google and Facebook to translate documents, develop self-driving cars, and recommend items for you to purchase next.

Black box machine learning models might work well for Amazon’s product recommendations, but they present Susan with some problems. First, she would have no way to understand how the model arrived at those optimal settings. Perhaps the recommendation was driven by a temperature sensor that sometimes gives bad readings. Second, she couldn’t diagnose what went wrong in the bad runs to prevent them in the future. Third, she couldn’t validate the model’s findings against her thirty years of experience. Perhaps the model found some evidence for a relationship Susan had always suspected but was never able to verify, yet a black box would give her no way to see it.

What Susan needs are machine learning models with insights. She needs to understand how a model makes predictions, so she can better do her job. Fortunately, there’s a cutting-edge branch of machine learning devoted to just this: interpretable machine learning. Interpretable machine learning models reveal the relationships between inputs and outputs. The result is a “white box” model that not only produces predictions, but also explains them.
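To make the idea concrete, here is a minimal sketch of one very simple white box model: a linear regression whose fitted weights can be read directly. Everything in it is an assumption made for illustration. The setting names (`temp_offset_c`, `speed_offset_pct`), the six historical runs, and the defect rates are invented, and a real interpretable model for a steel mill would likely be far richer than this.

```python
# Illustrative sketch only: the settings, runs, and defect rates are invented.

def fit_linear_model(rows, targets):
    """Fit y = w0 + w1*x1 + ... by ordinary least squares (normal equations)."""
    # Prepend a constant 1.0 so the first weight acts as the baseline.
    X = [[1.0, *row] for row in rows]
    n = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(n)]
        + [sum(x[i] * y for x, y in zip(X, targets))]
        for i in range(n)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


# Hypothetical historical runs: (temperature offset in C, line speed offset in %).
runs = [(-10, -2), (-5, 1), (0, 0), (5, 2), (10, -1), (3, -3)]
# Hypothetical defect rates, generated from a known rule so the fit can be checked.
defect_rate = [2.0 + 0.3 * t - 0.5 * s for t, s in runs]

names = ["baseline", "temp_offset_c", "speed_offset_pct"]
weights = fit_linear_model(runs, defect_rate)
for name, w in zip(names, weights):
    print(f"{name}: {w:+.3f}")
```

Each weight is itself the explanation: in this made-up data, a positive weight on `temp_offset_c` says that running hotter raises the defect rate, while a negative weight on `speed_offset_pct` says that running faster lowers it. Susan could check statements like these against her own experience.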

The American Society for Quality estimates that quality issues, such as product recalls, can incur costs in the range of 40% of total operations. Imagine having no more recalls because all your production is within specification. White box machine learning models bring us one step closer to making this a reality. With white box machine learning models, Susan can leverage all the data that her steel mill already generates. She can identify how each setting affects her quality metrics. She can explore interactions between the most important settings and gain a deeper understanding of what affects her process. Most importantly, Susan can easily diagnose which combinations of settings lead to out-of-specification production and can plan to prevent such cases in the future.
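The kind of diagnosis described above can be sketched in a few lines. The example below assumes a hypothetical, already-fitted white box model with one interaction term, and breaks a single out-of-specification run into the contribution of each setting. The weights, the specification limit, and the setting names are all invented for illustration and do not come from any real mill or product.

```python
# Illustrative sketch only: every weight, limit, and setting name is invented.
BASELINE = 2.0
WEIGHTS = {
    "temp_offset_c": 0.30,
    "speed_offset_pct": -0.50,
    "temp_x_speed": 0.05,  # interaction between the two settings
}
SPEC_LIMIT = 4.0  # hypothetical upper limit on the quality metric


def explain_run(settings):
    """Return the prediction and how much each term contributes to it."""
    features = dict(settings)
    features["temp_x_speed"] = (
        settings["temp_offset_c"] * settings["speed_offset_pct"]
    )
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    prediction = BASELINE + sum(contributions.values())
    return prediction, contributions


# A hypothetical bad run: furnace running hot, line running slow.
run = {"temp_offset_c": 8.0, "speed_offset_pct": -2.0}
prediction, contributions = explain_run(run)
main_driver = max(contributions, key=contributions.get)

print(f"predicted metric: {prediction:.2f} (limit {SPEC_LIMIT})")
for name, value in sorted(contributions.items(), key=lambda kv: -kv[1]):
    print(f"  {name}: {value:+.2f}")
print(f"largest contributor: {main_driver}")
```

Because the prediction is just the baseline plus the listed contributions, the breakdown points directly at the setting that pushed this run over the limit, which is the question a black box cannot answer.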

Alp Kucukelbir, Ph.D., is the co-founder and Chief Scientist of Fero Labs. He is an expert in machine learning and probabilistic programming and has published multiple papers with David Blei and Andrew Gelman. He is also an adjunct professor of computer science at Columbia University. Alp received his Ph.D. from Yale University, where he won the best thesis award.